It’s the Right Time to Invest In the Future of Measurement

Telescope Partners invests $21M in Measured to validate the business impact of media investments for DTC brands.

A few months ago, as a steady stream of data policy updates from platforms like Facebook, Apple and Google had marketers feeling less than confident about the future of advertising, we decided to live-stream a discussion with experts to provide some clarity for our clients about the impact of iOS 14.5 on Facebook measurement and performance. The overwhelming response made it clear that brands are hungry for expert insight and tactical guidance for addressing what kept them up last night – not four weeks ago.

Measured is committed to helping brands figure out measurement for continuous data-driven growth, which requires adapting to inevitable and frequent changes that affect our environment.

Things happen fast. Webinars that take two months of planning are no longer relevant and, if we’re being honest, nobody attends for the polished presentations or sales pitch bookends that have become the standard – people show up for useful insight.

In that spirit, we’ve created the Incrementality Insights series. About once a month we’ll conduct a live discussion on a topic you’ve been asking about, led by subject matter experts and drawing on knowledge we’ve uncovered working with ecommerce and media platforms, technology partners, and across our portfolio of brands.

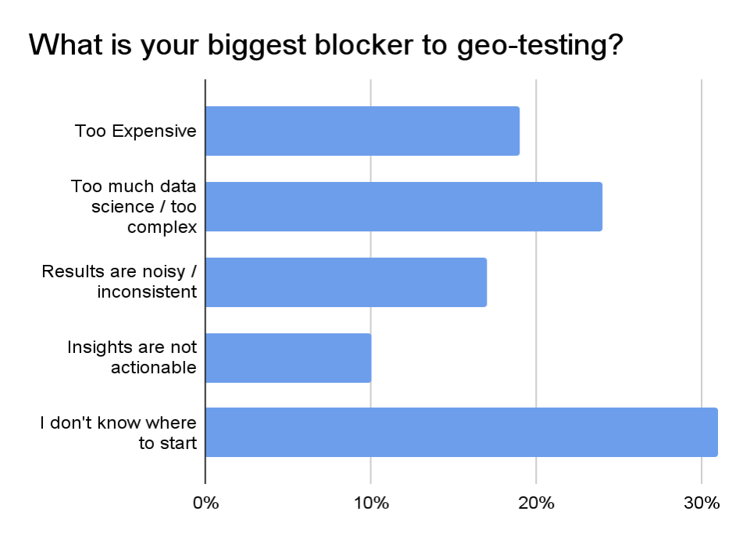

Last week, we tackled the topic of geo experimentation. As user-level tracking and multi-touch attribution are laid to rest, experimentation anchored on 1st party data is the future-proof approach for marketing measurement – and geo-matched market testing is a near-universal approach for measuring incrementality on most media channels, especially for prospecting tactics.

Geo experiments don’t require user-level data, but they can still reveal the incremental contribution of media to any metric that can be collected at the geo level. We’ve heard from some brands that Facebook reps have even recommended running geo-tests to validate results while they work to stabilize reporting after the ripple effects of iOS 14.5 and ATT.
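
To make the idea concrete, here is a minimal sketch of how a geo-level read can work without any user-level data. It is not Measured's actual method: it assumes a simple ratio-based counterfactual, and the function name `estimate_geo_lift` and all of the weekly order counts are hypothetical.

```python
def estimate_geo_lift(test_pre, test_post, control_pre, control_post):
    """Estimate incremental conversions in test geos (simplified illustration).

    Assumption: absent any media change, the test markets would have kept
    the same ratio to the control markets that they showed in the pre-period.
    """
    # Pre-period relationship between test and control markets.
    baseline_ratio = sum(test_pre) / sum(control_pre)
    # Counterfactual: what the test markets would have done without the change.
    expected_test_post = baseline_ratio * sum(control_post)
    # Incremental conversions: observed minus counterfactual.
    incremental = sum(test_post) - expected_test_post
    return expected_test_post, incremental


# Hypothetical weekly orders taken from first-party transaction data.
test_pre = [100, 110, 90, 100]       # test markets, before the media change
control_pre = [200, 220, 180, 200]   # control markets, same weeks
test_post = [130, 125, 135, 130]     # test markets, during the media change
control_post = [210, 200, 190, 200]  # control markets, same weeks

expected, incremental = estimate_geo_lift(
    test_pre, test_post, control_pre, control_post
)
print(f"Expected without media change: {expected:.0f}")
print(f"Incremental conversions: {incremental:.0f}")
```

The only inputs are conversions aggregated by market and week, which is why this style of test keeps working when user-level tracking does not.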

In short, it’s a good time to get to know geo. Watch the video and access the slides from last week for an overview of the methodology, a live demo of geo-experiment design, and a case study showing how one brand used geo-testing to reveal the scale potential of YouTube prospecting (they doubled their spend while maintaining target ROAS).

We also had a lively Q&A during the session, which is included below if you’re curious about what your peers are asking.

Want to learn more about geo and incrementality measurement? Read the guide!

| Question | Answer |
| --- | --- |
| How are conversion attribution windows approached, given that they vary across platforms, especially with the iOS 14 impact on Facebook? | The platform-reported conversion count for your attribution window essentially serves as the denominator in your incrementality percentage calculation. The way we arrive at the number of incremental conversions is independent of in-platform tracking, so you can set the windows however you like. |
| How does the Measured platform determine the list of markets to be included in a test? | We test within a single country, at different geo granularities (State, DMA, City, Zip). The market selection process is based on a correlation analysis driven by a regression model and a ranking process. This gives us a list of the best test geo-markets to use in your design. |
| What model are you using to predict the conversions? | We have three methods: linear regression, lasso, and flat average. We use the model that comes back with the best result in the A/A analysis. Our most used model is lasso. |
| What do you use as the source of conversions if not the in-platform tracking? | We’re looking at the change in your actual sales, not in what platforms report. The data comes from your source of truth for transactions, such as your ecommerce platform. |
| This seems like it’s limited to large brands that have a large reach across many markets. Is that correct, or can you run with only 3-4 markets? Is that recommended? | Smaller brands are actually better suited for tests, since there is a more discernible impact of media on the business. If a brand is limited to certain markets, it has to test at a more granular geo grain, like DMA or zip code. |
| Does the market selection model account for other channel spends in-market (mix of national and other geo/local) to control for noise? | Designs are typically deployed to control for the specific channels being tested. But markets are selected to make sure they are “matched,” meaning the markets being tested on are good candidates to understand “national” impact. |
| So if we heavy up geographically in platform, we can ideally see the outcome in our 1st party data to prove the effect is there? | That’s correct, you can see the scale in your 1st party data in those markets where the media heavy-up was executed. |
| Given the focus of this webinar on how marketers can leverage Measured, I am wondering if you can share some insights from your experience on how platforms such as Facebook and Google get involved in this process/partnership, if they do? | We often have marketing sciences representatives from Facebook and Google join discussions about the client’s learning agenda and experiment design considerations. Past that, it’s usually an automated execution. Sometimes they also participate in the interpretation of the results. Generally, they are good partners for clients in navigating the testing agenda. |
| I think you mentioned this is a mid/upper funnel campaign (so may not drive immediate conversion). Is the ROAS based on sales/conversions or some sort of intermediary metric like site visitors? | This would be sales/conversions observed within the observation window. Some channels have more “tail” past the 30 days that is not directly measurable. |
| In the YouTube example, how do you account for higher media costs (eCPM) as spend scales? | When spend is scaled on the platform in specific markets, CPMs do go up, sometimes significantly, so the marginal CPA/ROAS measured accounts for the CPM environment at higher spend levels. Naturally, auction-based environments can have more daily fluctuations, but we are looking to understand large-scale changes, so the CPM changes can be used directionally. |
| Do you run market tests on other demographic levels than DMAs? E.g. zip codes | We do. Our product can support State-DMA-City-Zip designs. (DMA is US only.) |
| One of the challenges out there is that clients in certain verticals (such as CPG) don’t always have access to 1st party conversion data. What is your recommendation/solution (if any) for them if they want to be able to run Geo Lift studies? | Retailer-based 3rd party transaction data is challenging. At the very least, you need data available at the state/DMA/zip geo grain to enable testing. Otherwise, you are better off using an MMM approach. |
| Brands that are CPG focused working with several retailers have several weeks of lag time on sales data reporting. How would you design an experiment for them to account for that lag? | In theory, this wouldn’t hurt our design or read process; it would just take longer for your read to arrive. |
| Is Measured looking at Geo-testing as an alternative to understanding long-term impact of channels/media, in particular for businesses with an extended sales cycle + slow time to convert (generally $1000+ AOV, 1+ months average time to convert)? | Typically, sales that take a really long time to convert, like a car, are not great candidates for geo testing. Consideration cycles under a month are good candidates for geo. |
| Regarding your introductory examples: Isn’t your design too simple because there are cells missing? What about interaction effects between YT and Paid Search (PLA and Paid Brand Search) (i.e., Scale-up & Scale-up vs. Scale-up & Hold-out vs. Hold-out & Scale-up vs. Hold-out & Hold-out)? | We will typically design more cells (this was a quick/simple demo). As Nick mentioned, you can get more creative and sophisticated with the design. It all depends on the business questions you are trying to answer. On the reporting side, we will also do an analysis on the source/medium to see if there is an interactive effect in the reporting. |
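
For readers curious about the model-selection answer in the Q&A (use whichever model performs best in the A/A analysis), here is a minimal sketch of that idea. It is an illustration under stated assumptions, not Measured's implementation: only two of the three model types are shown (lasso is omitted to keep the example dependency-free), the error metric (mean absolute percentage error) is our choice, and every function name and number is hypothetical.

```python
def fit_flat_average(control, test):
    # Flat average: always predict the test market's historical mean.
    avg = sum(test) / len(test)
    return lambda x: avg


def fit_linear(control, test):
    # Ordinary least squares: predict test-market conversions from control.
    n = len(control)
    mean_x = sum(control) / n
    mean_y = sum(test) / n
    sxx = sum((x - mean_x) ** 2 for x in control)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(control, test))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def mape(model, control, test):
    # Mean absolute percentage error of the model's predictions.
    return sum(abs(model(x) - y) / y for x, y in zip(control, test)) / len(test)


def pick_model(train_control, train_test, aa_control, aa_test):
    """Fit each candidate on history, then score it on an A/A period.

    In an A/A period nothing is changed in any market, so a good model
    should predict the test markets almost exactly.
    """
    candidates = {
        "flat_average": fit_flat_average,
        "linear_regression": fit_linear,
    }
    errors = {
        name: mape(fit(train_control, train_test), aa_control, aa_test)
        for name, fit in candidates.items()
    }
    best = min(errors, key=errors.get)
    return best, errors


# Hypothetical weekly conversions (control markets vs. test markets).
train_control = [100, 120, 140, 160]
train_test = [52, 61, 69, 82]
aa_control = [110, 150]  # held-out weeks with no media change anywhere
aa_test = [56, 75]

best, errors = pick_model(train_control, train_test, aa_control, aa_test)
print(best, errors)
```

Scoring on a period where nothing was changed means the winning model is the one whose counterfactual can be trusted most once a real test begins.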