Can I Measure Incrementality for Google?

Original Publisher

The marginal incremental contribution of Google products (Search/PLA, Display, Video) to business outcomes can absolutely be measured. There are multiple methodologies for measuring their contribution to conversions, revenue, LTV, etc.

Measuring incrementality on Google can be accomplished in any of the following ways:

- Design of Experiments (DoE): Carefully designed experiments that control for targeted audiences, audience overlap, campaign structures and optimization algorithms are the most transparent and granular way to measure impact on your business metrics. Experiments have to be designed around Google’s campaign-specific levers to control the factors relevant to the marketing experiment. Channels within Google’s AdWords (now Google Ads) platform are typically tested via a geo-matched market approach. A handful of small markets are identified as representative of the brand’s national market, and the desired media treatments on the Google channels are executed in these “test markets.” The results from these markets are then compared to other, larger control markets, and the differences in performance metrics (like conversion rates or revenue per user) between the test and control markets are interpreted to inform incrementality.

- Lift Study: In some cases, if the advertiser is running enough spend through a channel, Google may offer to run a lift study for the brand. The study is run as a managed service, with the Google account team handling design and execution. In the background, Google may use a ghost ads approach or a geo approach to run the study, then report the results back to the brand.

- Marketing Mix Models (MMM): This approach rolls aggregate marketing data up to the weekly or monthly level into a time series, which is then fed to a regression model to estimate the impact of Google on business metrics. This is a top-down approach, and results tend to be very macro in nature, providing an average impact of Google investments over a quarter or longer. MMM is not useful for breaking down impact estimates by campaign or tactic, so it’s less appropriate for short-term tactical planning. In practice, these models also take a while to build and stabilize, which can mean a lag of 6-12 weeks from the end of a quarter to results reporting.

- Multi-Touch Attribution (MTA): This approach ingests user-level data on all ad exposures, collected via pixels or other third-party tracking technologies, to construct consumer journeys, which are then fed into a machine learning algorithm that decomposes the impact of each ad exposure and its effectiveness in driving a business result. The strength of this approach is the extreme granularity of its reporting and its insight into customer journeys. More recently, with the advent of privacy regulation and Google restricting user-level third-party tracking, the collection of this kind of data has been nearly eliminated except in very special cases. Even when this data was being collected, the measurement was only correlational out of the box.
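The geo-matched comparison in the DoE item above can be sketched with a simple scaled-control calculation. This is a minimal sketch, assuming the outcome is summed across markets and that the pre-test ratio between test and control markets would have held had no treatment been applied; the names `MarketTotals` and `geo_lift` and all numbers are hypothetical, and production geo tests typically use more robust models.

```python
from dataclasses import dataclass


@dataclass
class MarketTotals:
    """Summed outcome (e.g., conversions or revenue) for a group of markets."""
    pre_period: float   # before the media treatment starts
    test_period: float  # while the media treatment is live


def geo_lift(test: MarketTotals, control: MarketTotals) -> dict:
    """Estimate the incremental outcome in test markets using a scaled control.

    Assumes the pre-period ratio between test and control markets would
    have held during the test period without the treatment.
    """
    if control.pre_period <= 0 or control.test_period <= 0:
        raise ValueError("control markets need positive outcomes in both periods")
    # Size of the test markets relative to the control markets, pre-test.
    scale = test.pre_period / control.pre_period
    # Counterfactual: what the test markets would have done without treatment.
    expected = control.test_period * scale
    incremental = test.test_period - expected
    return {
        "expected": expected,
        "incremental": incremental,
        "relative_lift": incremental / expected,
    }


if __name__ == "__main__":
    # Hypothetical: test markets run additional Google media in the test period.
    test = MarketTotals(pre_period=1_000, test_period=1_260)
    control = MarketTotals(pre_period=10_000, test_period=12_000)
    print(geo_lift(test, control))
```

In the example, the test markets would have been expected to produce 1,200 conversions based on their pre-period size relative to the control markets; they produced 1,260, an estimated 60 incremental conversions or a 5% lift.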

For tactical and timely measurement, DoE is the primary approach marketers prefer for informing incrementality, especially performance-driven acquisition marketers. In some cases, marketers also use Google’s lift studies to get another read. When available, multiple incrementality reads are beneficial because they provide different perspectives on the impact of Google’s advertising on the business.

DoE – Pros & Cons:

DoE is typically executed either by the brand or by a third-party vendor like Measured. DoEs can be designed to be very tactical and shaped to meet a diverse set of marketer learning objectives. Because a DoE can be executed independently of Google, it offers the highest levels of control and transparency in running experiments that match marketers’ learning objectives. All observations are captured through normal campaign reporting methods, so marketers can draw inferences about campaign performance with full visibility into how the data was collected. Its strengths therefore lie in being fully transparent and neutral while preserving tactical granularity of measurement.

Google Lift studies – Pros & Cons:

Lift studies are typically conducted by the platforms themselves, in this case Google, and are usually executed via a ghost ads counterfactual approach or a geo-based approach. In the ghost ads approach, Google’s ad delivery systems implement a version of the ghost ads framework to collect data about audiences who matched a campaign’s criteria but were not served an ad because of other constraints in the auction, like budgets and competitive bids. These audiences are then placed into a control audience whose performance is reported alongside the audiences who were exposed to the campaign creatives. This allows marketers to read the lift of a campaign without actually selecting control audiences and executing a control treatment. The geo-based approach, similar to the geo-matched market test, is preferred when a clean audience split test is not available: audiences are split by geography, and a strong read can still be attained.
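The ghost ads idea described above can be illustrated with a toy simulation. This sketch follows the randomized form of the ghost ads framework from published research, assuming users are first split at random into treatment and control arms and the same auction rule decides who would be served in each arm; Google’s actual implementation is not public in this detail, and every rate, name and parameter below is hypothetical. It also computes a naive exposed-vs-unexposed comparison to show why logging the would-be-exposed control users matters.

```python
import random


def simulate_ghost_ads(n_users: int = 400_000, ad_effect: float = 0.005,
                       seed: int = 7) -> dict:
    """Toy ghost ads simulation with hypothetical audience and auction rates."""
    rng = random.Random(seed)
    # [users, conversions] for each bucket being compared.
    buckets = {
        "treatment_exposed": [0, 0],    # treatment arm, won the auction, ad shown
        "treatment_unexposed": [0, 0],  # treatment arm, lost the auction
        "control_ghost": [0, 0],        # control arm, would have won: ghost logged, no ad
    }
    for _ in range(n_users):
        high_intent = rng.random() < 0.2
        base_cvr = 0.05 if high_intent else 0.01
        # Bidding favors high-intent users, so auction wins are not random.
        win_prob = 0.6 if high_intent else 0.2
        in_treatment = rng.random() < 0.5
        would_win = rng.random() < win_prob  # same auction rule in both arms
        if in_treatment:
            key = "treatment_exposed" if would_win else "treatment_unexposed"
            cvr = base_cvr + (ad_effect if would_win else 0.0)
        elif would_win:
            key = "control_ghost"
            cvr = base_cvr  # the ad was never shown, so there is no ad effect
        else:
            continue  # control users who would have lost the auction are not needed
        buckets[key][0] += 1
        buckets[key][1] += rng.random() < cvr

    def rate(key: str) -> float:
        users, conversions = buckets[key]
        return conversions / users

    exposed = rate("treatment_exposed")
    return {
        # Exposed users vs. their would-be-exposed counterparts in control.
        "ghost_lift": exposed - rate("control_ghost"),
        # Exposed vs. unexposed in the same arm: confounded by who wins auctions.
        "naive_lift": exposed - rate("treatment_unexposed"),
    }


if __name__ == "__main__":
    print(simulate_ghost_ads())
```

Because the simulated bidder favors high-intent users, the naive comparison credits the ad with conversions those users would have made anyway, while the ghost comparison should land close to the true `ad_effect`.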

The primary advantage of using a platform lift study to read the platform’s contribution is that these studies are sometimes offered at no cost to the brand, whereas running a PSA ad (another tactic for measuring ad effectiveness) has a cost. For many marketers, the primary objection to any publisher-led counterfactual study is neutrality: the publisher is grading its own homework. This has led many marketers to seek an unbiased, publisher-agnostic advanced analytics partner like Measured.

Google offers lift studies for its products: Search, Shopping, Display and Video. Independent incrementality measurement is a strong alternative to these lift studies and can accurately reveal the contribution of Google advertising.


Ready to see how trusted measurement can help your brand make smarter media decisions?

Get a Demo


Talk to Us!

Learn how our incrementality measurement drives smarter cross-channel media investment decisions.

Can I Measure Incrementality on Facebook?

This was originally published on

Yes, the incremental impact of Facebook advertising on business outcomes like conversions, revenue and profit can be measured.

There are four advanced marketing measurement techniques that can be used:

- Design of Experiments (DoE): Carefully designed experiments that control for targeted audiences, audience overlap, Facebook campaign structures, optimization algorithms, etc. are the most transparent, granular and accessible way to measure the impact of Facebook campaigns on business metrics like sales and revenue. In a typical Facebook DoE, a small portion of the targeted audience is held out for a control treatment, while the rest of the campaign audience receives the regular campaign creative and treatment. The difference in response rates between the two treatments is analyzed to calculate incrementality.

- Counterfactual studies: This is an alternative method that measures incrementality with a natural experimentation approach (vs. a designed experimentation approach like DoE). It uses data generated by Facebook’s auction systems to observe audiences who “match” the audiences targeted by a campaign but were not reached because of various factors (e.g., budget, ad auction competitiveness), and treats them as though they had been deliberately held out for a control treatment. The response rates of the campaign-exposed audiences and these synthetically constructed hold-out audiences are then analyzed to calculate incrementality.

- Marketing Mix Models (MMM): This approach rolls aggregate data up to the weekly or monthly level into a time series, which is then fed to a regression model to estimate the impact of Facebook on business metrics. Because of the nature of the approach, results tend to be very macro, providing an average impact of Facebook investments over a quarter. It is not very useful for breaking down impact estimates by campaign or tactic, so it’s less appropriate for short-term tactical planning. In practice, these models also take a while to build and stabilize, which can mean a lag of 6-12 weeks from the end of a quarter to results reporting.

- Multi-touch Attribution (MTA): This approach uses user-level data collected via pixels on all ad exposures to construct consumer journeys, which are then fed to a machine learning algorithm that decomposes the impact of each ad exposure in driving business results. The strength of this approach is the extreme granularity of its reporting and its insight into customer journeys. More recently, with the advent of privacy regulation and Facebook restricting user-level third-party tracking, the collection of this kind of data has been nearly eliminated except in very special cases. Even when this data was being collected, the measurement was only correlational out of the box.
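To hold out “a small portion of the targeted audience” in a Facebook DoE, the split has to be random and stable for the life of the test. One common way to achieve that, sketched here under the assumption that you control a list of user IDs (for example, a CRM list you would upload as two separate custom audiences), is deterministic hashing. The function name, salt and 10% holdout are hypothetical choices; Facebook’s own tools may split audiences differently.

```python
import hashlib


def assign_arm(user_id: str, holdout_fraction: float = 0.1,
               salt: str = "fb-holdout-test-1") -> str:
    """Deterministically place a user in the 'holdout' or 'exposed' arm.

    Hashing salt + user_id gives a stable, roughly uniform bucket: the same
    user always lands in the same arm for a given experiment, and changing
    the (hypothetical) salt reshuffles users for the next experiment.
    """
    if not 0.0 < holdout_fraction < 1.0:
        raise ValueError("holdout_fraction must be strictly between 0 and 1")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash onto [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "holdout" if bucket < holdout_fraction else "exposed"


if __name__ == "__main__":
    arms = [assign_arm(f"user-{i}") for i in range(100_000)]
    print(arms.count("holdout") / len(arms))  # close to the 0.10 target
```

Hashing rather than drawing random numbers at upload time means the assignment can be recomputed at any point, which keeps the holdout clean if audience lists are refreshed mid-test.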

For tactical and timely measurement, therefore, DoEs and counterfactual studies are the approaches marketers prefer, especially performance marketers. In many cases, marketers use both to get multiple reads and perspectives on the impact of a Facebook advertising investment on their business.

DoE – Pros & Cons:

DoE is typically executed either by the brand or by a third-party vendor like Measured. DoEs can be designed to be very granular and shaped to meet diverse marketer learning objectives. Because a DoE can be executed independently of Facebook’s account teams, it offers the highest levels of control and transparency in running experiments that match marketers’ learning objectives. All observations are captured through normal campaign reporting methods, so marketers can draw inferences about campaign performance with full visibility into how the data was collected. Its strengths therefore lie in being fully transparent and neutral while preserving granularity of measurement (e.g., ad set-level and audience-level measurements). However, to implement the control treatment, marketers have to serve a PSA (public service announcement) ad from their Facebook account, which can cost ~1% of their total Facebook budget. PSA costs can be minimized by narrowing the learning objectives and focusing on testing the most important audiences.
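The ~1% PSA figure depends on how much spend sits behind the audiences being tested. A rough way to estimate it, assuming PSA impressions for each tested audience cost about the same as the campaign’s own impressions, is that audience’s spend times its holdout fraction, summed over tested audiences. The function, audience names and budget below are hypothetical.

```python
def psa_cost(audience_spend: dict[str, float],
             holdout_fraction: dict[str, float]) -> float:
    """Rough PSA budget needed for the control treatment.

    Assumes PSA impressions for a tested audience cost roughly what the
    campaign's own impressions cost, so each tested audience needs PSA spend
    equal to its spend times the fraction held out. Audiences missing from
    holdout_fraction are not tested and need no PSA spend.
    """
    return sum(spend * holdout_fraction.get(name, 0.0)
               for name, spend in audience_spend.items())


if __name__ == "__main__":
    # Hypothetical Facebook spend by audience (total: 1,000,000).
    spend = {"lookalike": 400_000.0, "retargeting": 100_000.0, "broad": 500_000.0}
    two_audience_cost = psa_cost(spend, {"lookalike": 0.1, "retargeting": 0.1})
    one_audience_cost = psa_cost(spend, {"retargeting": 0.1})
    total = sum(spend.values())
    print(f"two audiences: {two_audience_cost / total:.1%}, "
          f"one audience: {one_audience_cost / total:.1%}")
```

With these numbers, testing two audiences with a 10% holdout costs 5% of the total budget, while narrowing the test to retargeting alone brings the PSA cost down to 1%, in line with the ~1% figure above.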

Counterfactual studies – Pros & Cons:

Counterfactual studies are typically conducted by the platforms themselves, in this case Facebook, where the feature is called Lift testing. Facebook’s ad delivery systems implement a version of the ghost ads framework to collect data about audiences who matched a campaign’s criteria but were not served an ad because of other constraints in the auction, like budgets and competitive bids. These audiences are then synthesized into a control audience whose performance is reported alongside the audiences who were exposed to the campaign creatives. This allows marketers to read the lift of a campaign without actually selecting control audiences and executing a control treatment.

The primary advantage of counterfactual studies is that marketers don’t have to spend any PSA dollars to implement the control treatment, since Facebook synthesizes the control audiences. However, this introduces blind spots into the audience selection and data reporting process, and results can easily be swayed by over- or undersampling of audiences within an ad set, campaign or date part, among other factors.
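The sampling concern above can be made concrete. Facebook does not publish how its synthetic control is assembled in this detail, so this toy example simply assumes the control audience over-represents a low-converting segment relative to the exposed audience. A pooled comparison then mixes the audience-mix difference into the lift, while comparing within each segment and weighting by the exposed mix (a standard stratification correction, not Facebook’s method) removes it. All names and counts are hypothetical.

```python
Segment = tuple[int, int]  # (users, conversions)


def pooled_rate(segments: dict[str, Segment]) -> float:
    """Conversion rate after lumping every segment together."""
    users = sum(u for u, _ in segments.values())
    conversions = sum(c for _, c in segments.values())
    return conversions / users


def naive_lift(exposed: dict[str, Segment], control: dict[str, Segment]) -> float:
    """Pooled exposed rate minus pooled control rate, ignoring segment mix."""
    return pooled_rate(exposed) - pooled_rate(control)


def stratified_lift(exposed: dict[str, Segment], control: dict[str, Segment]) -> float:
    """Per-segment rate differences, weighted by the exposed group's segment mix."""
    total_exposed = sum(u for u, _ in exposed.values())
    lift = 0.0
    for name, (e_users, e_conv) in exposed.items():
        c_users, c_conv = control[name]
        weight = e_users / total_exposed
        lift += weight * (e_conv / e_users - c_conv / c_users)
    return lift


if __name__ == "__main__":
    # Hypothetical: exposed users split 50/50, but the control oversamples "cold" users 80/20.
    exposed = {"engaged": (10_000, 500), "cold": (10_000, 120)}
    control = {"engaged": (2_000, 80), "cold": (8_000, 80)}
    print(f"naive: {naive_lift(exposed, control):.4f}, "
          f"stratified: {stratified_lift(exposed, control):.4f}")
```

Here the naive read is 1.5 percentage points, while the per-segment lifts (1.0 and 0.2 points) average to 0.6 points, so the unbalanced control more than doubles the apparent lift.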

The lift reporting within Facebook is also not granular: it does not let marketers view results by week or month, or by the constituent ad sets and audiences within a campaign (unless the study was deliberately set up to be read that way).

For many marketers, though, the primary objection to Facebook counterfactual studies is neutrality: Facebook is grading its own homework.


Experimentation is a fully transparent and neutral approach to Facebook incrementality measurement and can preserve the granularity of measurement (e.g., campaign, audience or ad set level).


What is A/B Testing for Media?

This was originally published on

A/B testing is a specialized type of incrementality measurement. In A/B testing, randomized groups are each shown a variant of a single variable (web design, landing page, marketing creative, etc.) to determine which variant is more effective.

Incrementality measurement uses A/B testing in certain media or general marketing channels, such as prospecting, where media exposure must be tracked for both the test and control groups. In media incrementality testing, the A group (test group) receives business-as-usual media exposure, while the B group (control group) has exposure withheld or is shown a null media exposure, typically a public service announcement (PSA) for a charity of the marketer’s choice. The more generic form of A/B testing is called Design of Experiments (DoE).
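Once the A (test) and B (control) groups have run, the lift and its statistical significance can be read with a standard two-proportion z-test. A minimal sketch, assuming conversions are counted per user in each group; the function name and the example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_lift(test_users: int, test_conv: int,
            control_users: int, control_conv: int) -> dict:
    """Lift of group A (business-as-usual media) over group B (withheld or PSA).

    Significance comes from a two-sided, two-proportion z-test with a pooled
    standard error (a normal approximation, reasonable for large groups).
    """
    p_test = test_conv / test_users
    p_control = control_conv / control_users
    pooled = (test_conv + control_conv) / (test_users + control_users)
    se = sqrt(pooled * (1 - pooled) * (1 / test_users + 1 / control_users))
    z = (p_test - p_control) / se
    return {
        "relative_lift": (p_test - p_control) / p_control,
        "z": z,
        "p_value": 2 * (1 - NormalDist().cdf(abs(z))),
    }


if __name__ == "__main__":
    # Hypothetical: 90% of the audience sees BAU media, 10% sees a PSA.
    print(ab_lift(test_users=90_000, test_conv=2_700,
                  control_users=10_000, control_conv=250))
```

For the example counts, group A converts at 3.0% and group B at 2.5%, a 20% relative lift with a z-score of roughly 2.8.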

Easily Run A/B Tests with Measured

We have worked hard to plug in directly to 100+ media platforms and their APIs. Because of this, Measured provides incrementality measurement and testing with ease and speed: we can run hundreds of audience-level experiments after a quick one-time setup with your publishers. Find out media’s true contribution across all your addressable and non-addressable channels.


Read the DTC Marketer’s Guide to Incrementality Measurement.

In marketing, A/B testing is a specialized type of incrementality measurement and is very effective when measuring the marginal lift of a media exposure.


Can I Operationalize Media Channel Experimentation in Steady State?

This was originally published on

In short, yes. Experimentation relies on steady-state operation of your media channels, because the vast majority of your customer base, receiving business-as-usual media exposure, will make up the all-important test group in the experimental design. Good experimentation carefully selects a small but representative subset of your customers and withholds media exposure from them at the channel level; this holdout serves as the control group that bears out the true incremental sales driven by channel-level media exposure.
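The arithmetic behind reading incremental sales from a steady-state holdout is short. A minimal sketch, assuming sales per user in the holdout is an unbiased estimate of what the exposed group would have bought without the channel; the function name and the figures (a 5% holdout) are hypothetical.

```python
def incremental_sales(exposed_users: int, exposed_sales: float,
                      holdout_users: int, holdout_sales: float) -> dict:
    """Channel-level incrementality from a steady-state holdout.

    The holdout's sales per user estimates what exposed users would have bought
    without the channel; the gap, scaled to the exposed population, is the
    incremental sales the channel drove.
    """
    exposed_per_user = exposed_sales / exposed_users
    baseline_per_user = holdout_sales / holdout_users
    incremental = (exposed_per_user - baseline_per_user) * exposed_users
    return {
        "incremental_sales": incremental,
        # Share of the exposed group's sales that the channel caused.
        "incremental_share": incremental / exposed_sales,
    }


if __name__ == "__main__":
    # Hypothetical: 950,000 customers keep BAU media, 50,000 are held out.
    print(incremental_sales(950_000, 2_090_000.0, 50_000, 100_000.0))
```

In the example, exposed customers average 2.20 in sales per user versus a 2.00 baseline, so about 190,000 of the exposed group’s 2,090,000 in sales (roughly 9%) is attributed to the channel.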

Operationalize Media Channel Experimentation with Measured

We have worked hard to plug in directly to 100+ media platforms and their APIs. Because of this, Measured provides incrementality measurement and testing with ease and speed: we can run hundreds of audience-level experiments after a quick one-time setup with your publishers. Find out media’s true contribution across all your addressable and non-addressable channels.

A clean experimental read requires withholding media exposure from a carefully selected, small but representative subset of your customers.


What Is a Design of Experiments (DOE) with Respect to Marketing?

This was originally published on

Design of Experiments (DOE) in marketing is a systematic method for designing experiments that measure the impact of marketing campaigns. It ensures that variables are properly controlled, that the lift measured at the end of the experiment is properly assessed, and that sample size requirements are properly estimated.

DoEs can be designed for most major types of media, like Facebook, Search, TV, display, etc.

How do you design an experiment for marketing campaigns?

Experiments are usually designed to understand the impact of a marketing campaign on desired marketing objectives. A simple design for measuring a marketing stimulus like a TV campaign or a Facebook campaign is a 2-cell experiment, where the campaign is published to one group of users and held out from another. The response behaviors of the two user groups are then observed over a period of time, and the impact of the campaign is assessed as the difference in response rates between them.

The science of experimental design applied to marketing is about carefully selecting and controlling the variables that affect outcomes, ensuring the sample size is sufficient, and tailoring the overall design to have enough statistical power to detect the effect being measured.
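Sample size sufficiency and power can be checked before launch. The article does not prescribe a formula; this sketch uses the textbook normal-approximation sample size for a two-sided, two-proportion test with equal cells, and the function name, baseline conversion rate and target lift in the example are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def users_per_cell(p_control: float, relative_lift: float,
                   alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each of two equal cells to detect a given relative lift.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    p_test = p_control * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # power requirement
    p_bar = (p_control + p_test) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_test * (1 - p_test))) ** 2
    return ceil(numerator / (p_test - p_control) ** 2)


if __name__ == "__main__":
    # Hypothetical: 2% baseline conversion rate, aiming to detect a 10% relative lift.
    print(users_per_cell(p_control=0.02, relative_lift=0.10))
```

With a 2% baseline conversion rate, detecting a 10% relative lift at 5% significance and 80% power needs roughly 80,000 users per cell; halving the detectable lift roughly quadruples that requirement.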

What are factors in experimental design for marketing campaigns?

The factors to be controlled depend on the phenomenon being measured. In general, though, factors that play a critical role in marketing and are candidates for control include marketing spend, campaign reach, impression frequency, audience quality, audience type, conversion rates, seasonality, collinearity and interaction effects.

Each marketing channel, such as Facebook, TV or Google Search, has its own campaign management levers for controlling audience reach, spend, frequency, etc. The challenge in designing proper experiments is to apply experimental design principles to each specific channel and to the way marketers typically operate it.

Basic Principles of Experimental Design in Marketing Measurement

Learning objectives: First and foremost, identify objectives that are meaningful to measure. Typically, these are sales and other business outcomes that marketing campaigns aim to drive.

Audiences and Platforms: Each marketing platform, such as Facebook or Google, has very specific ways to activate audiences and market to them. Experiments have to be designed around these campaign-specific levers to control the factors relevant to the experiment.

Decisions: Marketers make specific campaign decisions, such as budgets, bids, creative and audiences. Experiments have to be designed to inform these specific choices at a level of granularity that is meaningful to marketers.

How does experimental design differ from A/B testing?

Design of Experiments is a formal method for designing tests, and an A/B test is its simplest form: a two-cell experiment. Industrial-scale experiments are typically multivariate, with two or more cells, and are carefully designed to control for various factors so that the experiment can be flighted and data collected in very specific ways that yield a clean, usable read.
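A multi-cell design often compares several treatment cells against one shared control. The following minimal sketch assumes hypothetical cells (a holdout plus low- and high-spend cells) with binary conversions, uses a pooled two-proportion z-test, and applies a Bonferroni correction because every comparison shares the same control. Bonferroni is a conservative choice; other multiple-comparison corrections exist.

```python
import math


def multi_cell_readout(cells, control="holdout", alpha=0.05):
    """Compare each treatment cell with a shared control cell.

    cells: dict of cell name -> (conversions, users)
    Uses a Bonferroni-adjusted alpha because several cells share one control.
    """
    c_conv, c_n = cells[control]
    p_c = c_conv / c_n
    treatments = [name for name in cells if name != control]
    adj_alpha = alpha / len(treatments)
    results = {}
    for name in treatments:
        t_conv, t_n = cells[name]
        p_t = t_conv / t_n
        # Pooled two-proportion z-test against the shared control
        p_pool = (t_conv + c_conv) / (t_n + c_n)
        se = math.sqrt(p_pool * (1 - p_pool) * (1 / t_n + 1 / c_n))
        p_value = math.erfc(abs(p_t - p_c) / se / math.sqrt(2))
        results[name] = {
            "rel_lift": (p_t - p_c) / p_c,
            "significant": p_value < adj_alpha,
        }
    return results
```

With hypothetical counts of 1,000/50,000 in the holdout, 1,040/50,000 in the low-spend cell and 1,200/50,000 in the high-spend cell, only the high-spend cell’s 20% relative lift clears the adjusted threshold.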

Design of Experiments (DoE) Examples

Many marketing platforms enable experimentation, whether deliberately or coincidentally. On platforms like Facebook, it is possible to select audiences randomly and then target them differentially, which lets marketers design experiments that test audiences against different marketing treatments. Tactics like site retargeting take a similar approach: audiences are split into segments that receive different treatments, for example retargeting some segments while holding others out, and the response of each segment is observed over a period of time.
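A retargeting holdout needs a split that is random but stable, so a user stays in the same segment for the whole test. One common way to get that, sketched here under the assumption that each user has a stable ID, is to hash the ID together with an experiment-specific salt. The function name, salt and holdout share are hypothetical.

```python
import hashlib


def assign_segment(user_id: str, salt: str, holdout_share: float) -> str:
    """Deterministically place a user in the 'retarget' or 'holdout' segment."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1)
    bucket = int(digest[:8], 16) / 0x100000000
    return "holdout" if bucket < holdout_share else "retarget"
```

Calling `assign_segment("user-123", "retargeting-test", 0.1)` returns the same segment every time, and roughly 10% of users land in the holdout. Changing the salt for a new experiment reshuffles the assignment.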

How Do MTA and DoE Experiments Work Together?

MTA and DoE are complementary because incrementality testing addresses many of the data-tracking gaps that currently severely limit MTA’s ability to measure marketing contribution across all addressable marketing channels.

Currently MTA has a major data gap in the so-called walled gardens (Facebook, AdWords, Instagram, Pinterest, YouTube, etc.), where no customer-level data gathering is permitted. MTA has no answers for these channels and no clear avenue for improvement short of a 180-degree reversal on data sharing by the likes of Facebook (don’t hold your breath). Even in trackable, addressable media channels, pixel-related data loss can be severe, ranging from 5% in paid search to as much as 80% in channels like online video. Cookie-level tracking has lower rates of data loss, but its ongoing viability is in question since Google announced that, beginning in Q1 2020, it would discontinue sharing the Google User IDs this approach relies on. DoE can both fill the gaps left by walled-garden channels and validate and inform measurement in channels suffering from pixel-related data loss. As the market evolves and privacy legislation such as GDPR and CCPA proliferates, MTA measurement unsupported by DoE will likely become obsolete.

Experiments must be designed to answer the specific questions marketers have about their paid media, at a level of granularity that is meaningful.


How Do You Calculate Incremental Sales Driven by a Media Tactic?

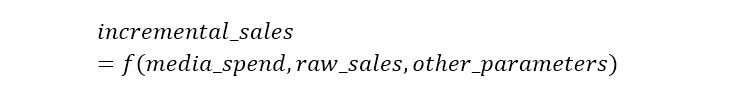

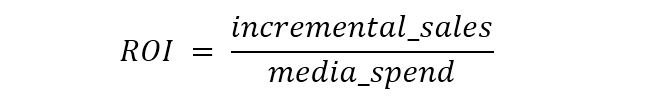

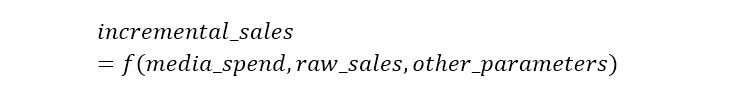

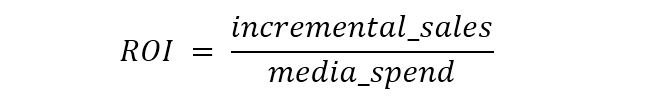

This was originally published on

The impact of a marketing tactic (e.g. Facebook prospecting, retargeting, catalog, direct mail, national TV) is typically measured in terms of the incremental sales it drives. These incremental sales are calculated using advanced marketing measurement techniques, which fall into three major types:

- Design of Experiments (DoE)

- Marketing Mix Modeling (MMM)

- Multi-Touch Attribution (MTA)

Once incremental sales are calculated for a tactic using one of the above techniques, the ROI for that tactic is calculated using the following formula.
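A minimal sketch of the last step, under two stated assumptions: incremental sales come from a DoE-style readout in which a held-out control cell supplies the baseline, and ROI is defined as (incremental revenue - media spend) / media spend. That definition is a common convention, not necessarily the exact formula intended here; incremental ROAS (incremental revenue / spend) is another widely used variant. Function names and figures are hypothetical.

```python
def incremental_sales(test_sales, test_size, ctrl_sales, ctrl_size):
    """Incremental sales of the test cell relative to a held-out control.

    The control cell's sales are scaled to the test cell's size to estimate
    what the test cell would have sold without the media tactic.
    """
    baseline = ctrl_sales * (test_size / ctrl_size)
    return test_sales - baseline


def roi(incremental_revenue, media_spend):
    """ROI, assuming the definition (incremental revenue - spend) / spend."""
    return (incremental_revenue - media_spend) / media_spend


def iroas(incremental_revenue, media_spend):
    """Incremental return on ad spend: incremental revenue per unit of spend."""
    return incremental_revenue / media_spend
```

For example, if a hypothetical test cell of 1,000,000 customers generates $250,000 in sales while a 100,000-customer control cell generates $20,000, the scaled baseline is $200,000 and incremental sales are $50,000. Against $40,000 of media spend, that is an ROI of 0.25 (25%) and an incremental ROAS of 1.25.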

Incremental sales driven by a media tactic are calculated using advanced marketing measurement techniques like experimentation, MMM or MTA.
